LM Studio

Connect to LM Studio to run your AI models locally.

LM Studio is a popular way to run AI models locally. Whether a given model actually runs depends on your hardware, so we suggest starting with a 7B-parameter model.

If you run into issues or need help installing LM Studio, you can consult the LM Studio documentation here.

To use LM Studio, please make sure that:

- The app is running on the same machine as Novelcrafter. Connecting to an LM Studio instance running elsewhere on your network is not supported.

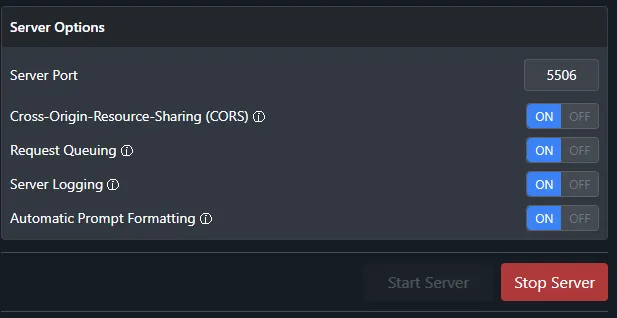

- You have enabled the CORS setting inside of LM Studio (see image below).

- The port matches the one set in the Novelcrafter connection (5506 by default). If it still doesn’t work, another program may be interfering with that port; try a different one, such as 1234.

Don’t forget to start the server when you open LM Studio! Here is how LM Studio should look once everything is set up (using 5506 as the port):

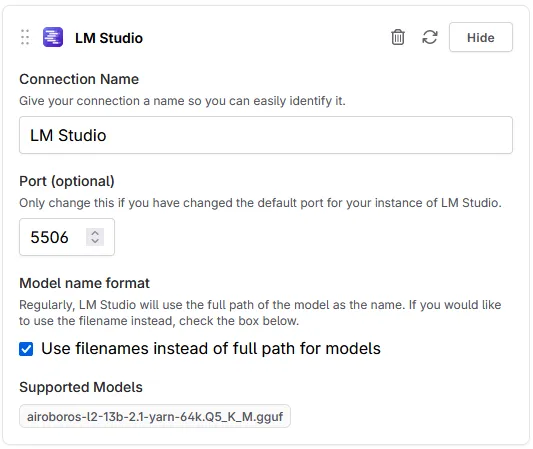
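If you want to confirm outside of Novelcrafter that the server is actually running on the chosen port, a small script can ask it for its model list. This is only a minimal sketch: it assumes LM Studio's local server exposes an OpenAI-compatible `/v1/models` endpoint, and the helper names `models_url` and `server_is_up` are made up for this example.

```python
import http.client
import urllib.error
import urllib.request


def models_url(base_url: str) -> str:
    """Build the model-list URL from a base URL such as http://localhost:5506."""
    base = base_url.rstrip("/")
    # Accept a base URL with or without a trailing /v1 (assumption: either may be used).
    if base.endswith("/v1"):
        return base + "/models"
    return base + "/v1/models"


def server_is_up(base_url: str, timeout: float = 3.0) -> bool:
    """Return True if the server answers the model-list request with HTTP 200."""
    try:
        with urllib.request.urlopen(models_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a non-HTTP answer: treat as "not up".
        return False
```

Calling `server_is_up("http://localhost:5506")` should return `True` while the server is started, and `False` if it is stopped or listening on a different port.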

To integrate LM Studio with Novelcrafter, all you need to do is add the connection. The defaults should work without tweaking.

If everything is connected properly, your connection should look like this:

The model listed under ‘supported models’ depends on which model you have loaded in LM Studio.
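To illustrate where such a model list can come from, the sketch below reads model names out of a model-list response. It assumes the OpenAI-style response shape (`{"data": [{"id": ...}]}`), which may not be exactly what Novelcrafter uses internally. The function name `model_ids` and the model name in the sample are hypothetical.

```python
import json


def model_ids(response_text: str) -> list[str]:
    """Extract model identifiers from an OpenAI-style model-list response."""
    payload = json.loads(response_text)
    # Each entry in "data" is expected to carry an "id"; skip entries without one.
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


# Hypothetical sample response with a single loaded model.
sample = '{"object": "list", "data": [{"id": "example-7b-model", "object": "model"}]}'
print(model_ids(sample))
```

If you load a different model in LM Studio, the identifier returned here would change accordingly.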

Troubleshooting

If something goes wrong, you can try these steps. We will add more as our users encounter issues.

- Check that the Base URL is correct and includes the right port (it may differ from the default).

- You might need to reload either Novelcrafter or the LM Studio connection for the server to be picked up (reloading the tab is usually faster). Novelcrafter does not check in the background whether the server is up, so you need to do this manually.
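The first check above can be sketched as a short script that verifies a Base URL contains an explicit port and that something is listening on it. It uses only the Python standard library. The helper names `host_and_port` and `port_is_open` are illustrative, and an open port only shows that some program is listening there, not necessarily LM Studio.

```python
import socket
from urllib.parse import urlsplit


def host_and_port(base_url: str) -> tuple[str, int]:
    """Split a Base URL into host and port, rejecting URLs without an explicit port."""
    parts = urlsplit(base_url)
    if parts.hostname is None or parts.port is None:
        raise ValueError(f"Base URL needs an explicit host and port: {base_url!r}")
    return parts.hostname, parts.port


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused or timed out: nothing reachable on that port.
        return False
```

For example, `port_is_open(*host_and_port("http://localhost:5506"))` returning `False` suggests the server is not started or is using another port.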